Introduction

Have you ever wondered, precisely, what happens on the Web server when a request for an ASP.NET Web page comes in? How does the Web server handle the incoming request? How is the HTML that is emitted to the client generated? What mechanisms are available to us as developers for working with an incoming request in its various stages of processing? In this article we'll take a rather detailed look at how ASP.NET Web pages are processed on the Web server.

Step 0: The Browser Makes an HTTP Request for an ASP.NET Web Page

The entire process begins with a Web browser making a request for an ASP.NET Web page. For example, a user might type into their browser's Address window the URL for this article, http://aspnet.4guysfromrolla.com/articles/011404.aspx. The Web browser, then, would make an HTTP request to the 4Guys Web server, asking for the particular file /articles/011404-1.aspx.

Step 1: The Web Server Receives the HTTP Request

The sole task of a Web server is to accept incoming HTTP requests and to return the requested resource in an HTTP response. The 4Guys Web server runs Microsoft's Internet Information Services (IIS) Web server. The first thing IIS does when a request comes in is decide how to handle the request. Its decision is based upon the requested file's extension. For example, if the requested file has the .asp extension, IIS will route the request to be handled by asp.dll. There are numerous file extensions that map to the ASP.NET engine, some of which include:

- .aspx, for ASP.NET Web pages,
- .asmx, for ASP.NET Web services,
- .config, for ASP.NET configuration files,
- .ashx, for custom ASP.NET HTTP handlers,
- .rem, for remoting resources,
- And others!

These extension mappings are configurable in IIS; you could, for example, have .scott files routed to the ASP.NET engine.

The diagram below illustrates steps 0 and 1 of a request for an ASP.NET Web page. When a request comes into the Web server, it is routed to the proper place (perhaps asp.dll for classic ASP page requests, perhaps the ASP.NET engine for ASP.NET requests) based on the requested file's extension.
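The extension-based routing described above can be modelled in a few lines. This is only a conceptual sketch in Python, not how IIS is implemented: the table name EXTENSION_MAP, the function route_request, and the labels on the right-hand side are hypothetical names chosen for illustration, and treating unknown extensions as static files is an assumption of the sketch.

```python
import posixpath
from urllib.parse import urlsplit

# Hypothetical mapping table. The labels are descriptive strings for this
# sketch, not real IIS configuration values.
EXTENSION_MAP = {
    ".asp": "asp.dll",
    ".aspx": "ASP.NET engine",
    ".asmx": "ASP.NET engine",
    ".config": "ASP.NET engine",
    ".ashx": "ASP.NET engine",
    ".rem": "ASP.NET engine",
}


def route_request(url: str) -> str:
    """Return the label of the component that would handle this URL."""
    path = urlsplit(url).path
    _, extension = posixpath.splitext(path)
    # Assumption: anything not in the table is served as a static file.
    return EXTENSION_MAP.get(extension.lower(), "static file")


if __name__ == "__main__":
    print(route_request("http://aspnet.4guysfromrolla.com/articles/011404.aspx"))
    print(route_request("/default.asp"))
    print(route_request("/images/logo.gif"))
```

Adding a new mapping, such as routing .scott files to the ASP.NET engine, amounts to adding one more entry to the table.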

Step 2: Examining the ASP.NET Engine

An initial request for http://aspnet.4guysfromrolla.com/articles/011404.aspx will reach IIS and then be routed to the ASP.NET engine, but what happens next? The ASP.NET engine is often referred to as the ASP.NET HTTP pipeline, because the incoming request passes through a variable number of HTTP modules on its way to an HTTP handler. HTTP modules are classes that have access to the incoming request. These modules can inspect the incoming request and make decisions that affect the internal flow of the request. After passing through the specified HTTP modules, the request reaches an HTTP handler, whose job it is to generate the output that will be sent back to the requesting browser. The following diagram illustrates the pipeline an ASP.NET request flows through.

A number of HTTP modules are included in the pipeline by default, among them:

- OutputCache, which handles returning and caching the page's HTML output, if needed
- Session, which loads in the session state based on the user's incoming request and the session method specified in the Web.config file
- FormsAuthentication, which attempts to authenticate the user based on the forms authentication scheme, if used
- And others!

You can see the full list of default modules by opening the machine.config file (located in the $WINDOWS$\Microsoft.NET\Framework\$VERSION$\CONFIG directory) and searching for the httpModules element.

Different ASP.NET resources use different HTTP handlers. The handlers used by default are spelled out in the machine.config's httpHandlers section.

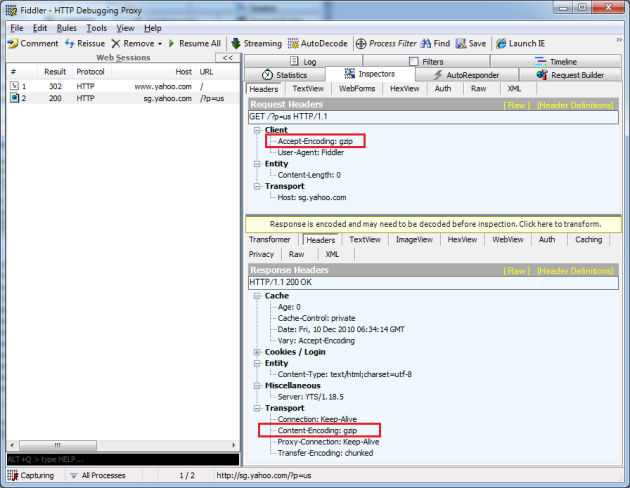

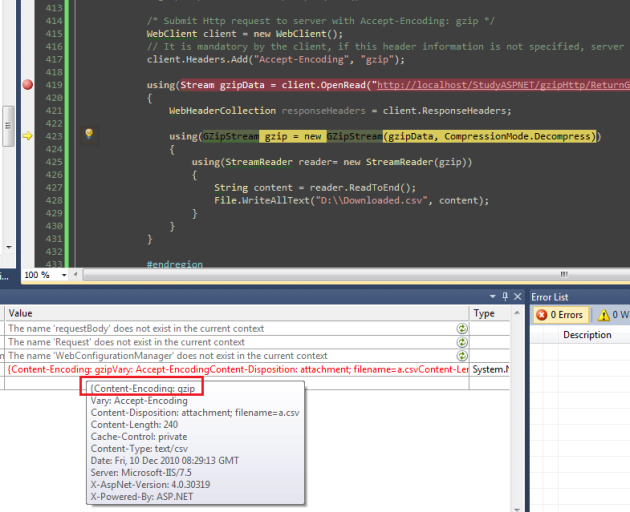

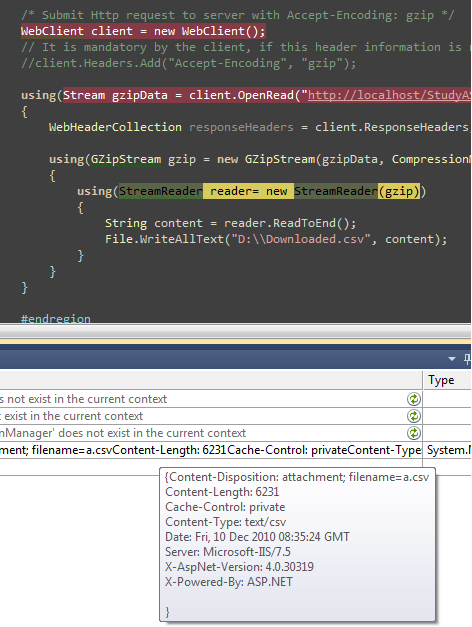

You can add your own HTTP modules and handlers for every Web application on the server by modifying machine.config, or you can add them to a particular Web application by modifying that application's Web.config file. A thorough discussion on using HTTP modules and handlers is far beyond the scope of this article, but realize that you can accomplish some neat things using modules and handlers. For example, you can use HTTP modules to provide a custom URL rewriter, which can be useful for anything from automatically fixing 404 errors to supporting shorter, more user-friendly URLs. (See Rewrite.NET - A URL Rewriting Engine for .NET for an example of using HTTP modules for URL rewriting.) Another cool use of modules is compressing the generated HTML. For a good discussion on HTTP modules and handlers be sure to read Mansoor Ahmed Siddiqui's article: HTTP Handlers and HTTP Modules in ASP.NET.
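To make the module-then-handler flow concrete, here is a small conceptual model of the pipeline, written in Python rather than against the real ASP.NET classes. Everything in it is hypothetical: Request, url_rewriter, request_logger, page_handler, and run_pipeline are illustrative names, the "/faq" rewrite rule is invented, and real ASP.NET modules hook into events raised during processing rather than being called as plain functions in a loop.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Request:
    """A stand-in for the incoming request (hypothetical, for this sketch)."""
    path: str
    notes: List[str] = field(default_factory=list)


# A module may inspect or modify the request; a handler produces the output.
Module = Callable[[Request], None]
Handler = Callable[[Request], str]


def url_rewriter(request: Request) -> None:
    """Map a short, friendly URL onto the real page (invented rule)."""
    if request.path == "/faq":
        request.path = "/articles/faq.aspx"
        request.notes.append("rewritten")


def request_logger(request: Request) -> None:
    """Record the path as seen at this point in the pipeline."""
    request.notes.append("logged " + request.path)


def page_handler(request: Request) -> str:
    """Generate the output that would be sent back to the client."""
    return "Rendered output for " + request.path


def run_pipeline(request: Request, modules: List[Module], handler: Handler) -> str:
    # The request passes through every module in order, then reaches the handler.
    for module in modules:
        module(request)
    return handler(request)


if __name__ == "__main__":
    req = Request("/faq")
    print(run_pipeline(req, [url_rewriter, request_logger], page_handler))
    print(req.notes)
```

Because the rewriter runs before the logger and the handler, both see the rewritten path. This ordering is what lets a module change how the rest of the request is processed.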

Step 3: Generating the Output

The final step is for the suitable HTTP handler to generate the appropriate output. This output is then passed back through the HTTP modules and on to IIS, which sends it to the client that initiated the request. (If the client was a Web browser, the Web browser would receive this HTML and display it.)

Since the steps for generating the output differ by HTTP handler, let's focus in on one in particular: the HTTP handler that is used to render ASP.NET Web pages. To retrace the initial steps, when a request comes into IIS for an ASP.NET page (i.e., one with a .aspx extension), the request is handed off to the ASP.NET engine. The request then moves through the modules. The request is then routed to the PageHandlerFactory, since the machine.config's httpHandlers section maps .aspx requests to it.

The PageHandlerFactory class is an HTTP handler factory. Its job is to provide an instance of an HTTP handler that can handle the request. What PageHandlerFactory does is find the compiled class that represents the ASP.NET Web page that is being requested.

If you use Visual Studio .NET to create your ASP.NET Web pages you know that the Web pages are composed of two separate files: a .aspx file, which contains just the HTML markup and Web controls, and a .aspx.vb or .aspx.cs file that contains the code-behind class (which contains the server-side code). If you don't use Visual Studio .NET, you likely use a server-side